Welcome to 2007! The

webmaster central team is very excited about our plans for this year, but we thought we'd take a moment to reflect on 2006. We had a great year building communication with you, the webmaster community, and creating tools based on your feedback. Many on the team were able to come out to conferences and meet some of you in person, and we're looking forward to meeting many more of you in 2007. We've also had great conversations and gotten valuable feedback in our

discussion forum, and we hope this blog has been helpful in providing information to you.

We said goodbye to the

Sitemaps blog and launched this broader blog in August. And after doing so, our number of unique monthly visitors more than doubled. Thanks! We got much of our non-Google traffic from other webmaster community blogs and forums, such as the

Search Engine Watch blog,

Google Blogoscoped, and

WebmasterWorld. In December,

seomoz.org and the new

Searchengineland.com were our biggest non-Google referrers. And social networking sites such as

digg.com,

reddit.com,

del.icio.us, and

slashdot.org sent webmaster tools many of our visitors, and a blog by somebody named

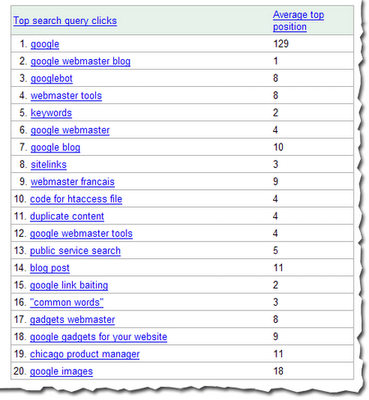

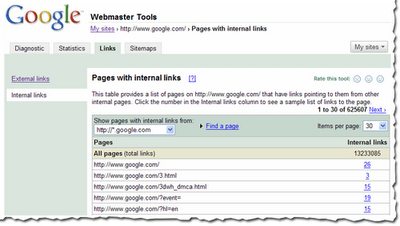

Matt Cutts sent a lot of referrers our way as well. And these are the top Google queries that visitors clicked on:

Our most popular post was about the

Googlebot activity reports and crawl rate control that we launched in October, followed by details about

how to authenticate Googlebot. We have only slightly more Firefox users (46.28%) than Internet Explorer users (46.25%). 89% of you use Windows. After English, our readers most commonly speak French, German, Japanese, and Spanish. And after the United States, our readers primarily come from the UK, Canada, Germany, and France.

Here's some of what we did last year.

January

We expanded into

Swedish, Danish, Norwegian, and Finnish.

You could hear

Matt on webmaster radio.

February

We launched

several new features, including:

- robots.txt analysis tool

- page with the highest PageRank by month

- common words in your site's content and in anchor text to your site

We met many of you at the

Google Sitemaps lunch at SES NY.

You could hear

me on webmaster radio.

March

We launched

a few more features, including:

- showing the top position of your site for your top queries

- top mobile queries

- download options for Sitemaps data, stats, and errors

April

We got a whole new look and

added yet more features, such as:

- meta tag verification

- notification of violations to the webmaster guidelines

- reinclusion request form and spam reporting form

- indexing information (can we crawl your home page? is your site indexed?)

We also added a comprehensive

webmaster help center and expanded the

webmaster guidelines from 10 languages to 18.

We met more of you at the

Google Sitemaps lunch at Boston Pubcon.

Matt talked about the

new caching proxy.

We talked to many of you at

SES Toronto.

May

Matt introduced you to our new search evangelist,

Adam Lasnik.

We hung out with some of you in our hometown at

Search Engine Watch Live Seattle and over at

SES London.

June

We launched user surveys to learn more about how you interact with webmaster tools.

We

expanded some of our features, such as:

- Increased the number of crawl errors shown to 100% within the last two weeks

- Increased the number of Sitemaps you can submit from 200 to 500

- Expanded query stats so you can see them per property and per country and made them available for subdirectories

- Increased the number of common words in your site and in links to your site from 20 to 75

- Added Adsbot-Google to the robots.txt analysis tool

Yahoo! Stores incorporated Sitemaps for their merchants.

July

We expanded into

Polish.

We began supporting the <meta name="robots" content="noodp"> tag to allow you to

opt out of using Open Directory titles and descriptions for your site in the search results.

We had a great time talking to many of you about international issues at

SES Latino in Miami.

August

August was an exciting month for us, as we launched webmaster central! As part of that, we renamed Google Sitemaps to webmaster tools, expanded our Google Group to include all types of webmaster topics, and expanded the help content in our webmaster help center. We also

launched some new features, including:

- Preferred domain control

- Site verification management

- Downloads of query stats for all subfolders

In addition, I took over the GoodKarma podcast on webmasterradio for

two shows (one all about

Buffy the Vampire Slayer!) and we met even more of you at the

Google Webmaster Central lunch at SES San Jose.

September

We

improved reporting of the cache date in search results.

We provided a way for you to

authenticate Googlebot.

And we started

updating query stats more often and for a shorter timeframe.

October

We launched

several new features, such as:

- Crawl rate control

- Googlebot activity reports

- Opting in to enhanced image search

- Display of the number of URLs submitted via a Sitemap

And you could hear Matt being interviewed in a

podcast.

November

We launched

sitemaps.org, for

joint support of the Sitemaps protocol between us, Yahoo!, and Microsoft.

We also started notifying you if we

flagged your site for badware. And if you're an English news publisher included in Google News, we made News Sitemaps available to you.

We partied with lots of you at "Safe Bets with Google" at

Pubcon Las Vegas.

We introduced you to our new Sitemaps support engineer,

Maile Ohye, and our first webmaster trends analyst,

Jonathan Simon.

December

We met even more of you at the webmaster central lunch at

SES Chicago.

Thanks for spending the year with us. We look forward to even more collaboration and communication in the coming year.
