Webmaster level: all

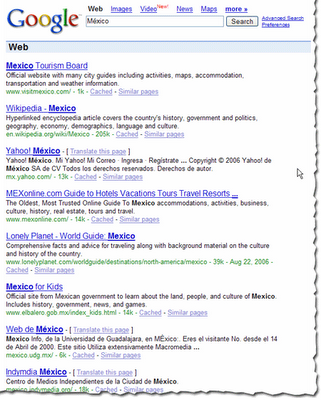

Users often turn to Google to answer a quick question, but research suggests that up to 10% of users’ daily information needs involve learning about a broad topic. That’s why today we’re introducing new search results to help users find in-depth articles.

These results are ranked algorithmically based on many signals that look for high-quality, in-depth content. You can help our algorithms understand your pages better by following these recommendations:

- use schema.org “article” markup,

- provide authorship markup,

- use rel=next and rel=prev for paginated articles (and watch out for common rel=canonical mistakes),

- provide information about your organization’s logo,

- and of course, create compelling in-depth content.
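As a rough illustration of the first and third recommendations, here is a minimal Python sketch that generates the markup for a page template. It rests on several assumptions that are not from this post: the helper names `article_jsonld` and `pagination_links` are hypothetical, JSON-LD is used only as one of the syntaxes schema.org data can be written in (the recommendation above does not prescribe a syntax), and the `?page=N` URL scheme is made up for the example.

```python
import json
from html import escape


def article_jsonld(headline, date_published, author_name, image_url):
    """Return a <script> tag holding schema.org Article data as JSON-LD.

    Hypothetical helper: the property set shown here is a small,
    illustrative subset of what schema.org defines for Article.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
        "image": image_url,
    }
    # Escape "</" so the JSON payload cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


def pagination_links(base_url, page, total_pages):
    """Return rel=prev / rel=next <link> tags for one page of a series.

    Assumes pages are addressed as base_url?page=N (an invented scheme).
    """
    if not 1 <= page <= total_pages:
        raise ValueError("page out of range")
    links = []
    if page > 1:  # the first page has no predecessor
        href = escape(f"{base_url}?page={page - 1}", quote=True)
        links.append(f'<link rel="prev" href="{href}">')
    if page < total_pages:  # the last page has no successor
        href = escape(f"{base_url}?page={page + 1}", quote=True)
        links.append(f'<link rel="next" href="{href}">')
    return links


if __name__ == "__main__":
    # Placeholder values, not real article data.
    print(article_jsonld(
        "Example headline",
        "2013-01-01",
        "Jane Doe",
        "https://example.com/lead-image.png",
    ))
    for tag in pagination_links("https://example.com/story", 2, 3):
        print(tag)
```

Both outputs would go in the page's `<head>`. A middle page of a series gets both a `prev` and a `next` link, while the first and last pages get only one.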

Following these best practices along with our webmaster guidelines helps our systems to better understand your website’s content, and improves the chances of it appearing in this new set of search results.

The in-depth articles feature is rolling out now on google.com in English. For more information, check out our help center article, and feel free to post in the comments in our forums.
